New #preprint out! Binary Oxide Ceramics for Solar Cells: A Comparative & Bibliometric Analysis. We analyzed 50,000+ papers on TiO₂, ZnO, Al₂O₃, SiO₂, CeO₂, Fe₂O₃ & WO₃ in solar applications.

Minor revisions!

...and apparently our method to derive competitive interaction strengths from empirical observation is considered "extremely elegant". That's a nice start to the vacation!

#AcademicChatter

#TheoreticalEcology

#EcologicalModelling

#PeerReview

#EcologicalNetworks

#preprint available here:

https://doi.org/10.1101/2024.01.25.577181

#Academia

#AI

#LLM

#LLMs

#AcademicChatter

I *love* #preprint servers, but bioRxiv, medRxiv, and Research Square all said they don't take more opinion-type papers.

Hat tip to @WiseWoman for suggesting #arXiv.

I can *FINALLY* say that it's officially released as a preprint.

Differentiating hype from practical applications of large language models in medicine - a primer for healthcare professionals.

https://arxiv.org/abs/2507.19567

DOI: 10.48550/arXiv.2507.19567

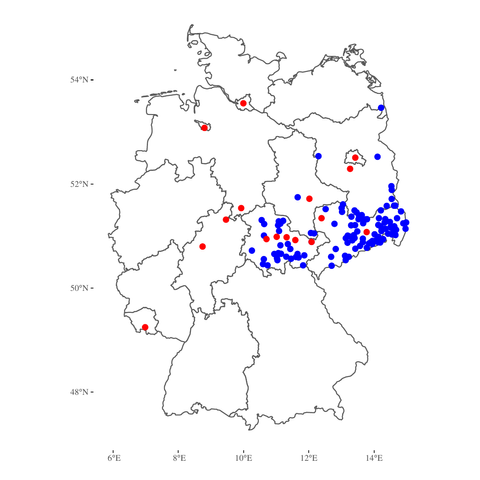

#Preprint: "Immigration and the state of democracy ... rated far more negatively in the new federal states than in the West"

https://www.kai-arzheimer.com/paper/bundestagswahl-2021-ostdeutschland-linkspartei-afd/

"_Emerging, and rapidly accumulating, evidence indicates that agential AI may mirror, validate or amplify delusional or grandiose content, particularly in users already vulnerable to psychosis, due in part to the models’ design to maximise engagement and affirmation, although notably it is not clear whether these interactions have resulted or can result in the emergence of de novo psychosis in the absence of pre-existing vulnerability._"

Morrin, H., Nicholls, L., Levin, M., Yiend, J., Iyengar, U., DelGuidice, F., … Pollak, T. (2025, July 11). Delusions by design? How everyday AIs might be fuelling psychosis (and what can be done about it). https://doi.org/10.31234/osf.io/cmy7n_v5

#Preprint #AI #ArtificialIntelligence #Technology #Tech #Psychology #Neuroscience #Psychiatry @psychology

@benlockwood

High time that Plan U is widely implemented!

Plan U https://journals.plos.org/plosbiology/article?id=10.1371/journal.pbio.3000273

(Plan U: Universal access to scientific and medical research via funder #preprint mandates)

I will never understand why authors who post a manuscript on a preprint server spontaneously decide that their readers will be better off with all the figures at the end, and separated from the legends on top of that.

WHY

(Same question for papers sent out for review, btw. Most journals allow the format of your choice for the first submission. WHY not make it a nice, easily readable format??)

What is a Preprint and Why Should I Care?

New #preprint by Alex Koplenig and me:

"Statistical errors undermine claims about the evolution of polysynthetic languages". (https://doi.org/10.31219/osf.io/g72hw_v1)

This is a comment on Bromham et al. (2025): "Macroevolutionary analysis of polysynthesis..." published in #PNAS (https://doi.org/10.1073/pnas.2504483122).

In a nutshell: Statistical models that fit the data better call almost all reported results into question.

- Most structure is due to phylogenetic and geographic clustering.

- Neither spatial nor phylogenetic isolation is significant.

- L1 population is only partially significant, and the effect direction is reversed.

mRNA 3′UTRs chaperone intrinsically disordered regions to control protein activity.

#RNA #mRNA #3UTR #IntrinsicallyDisorderedRegions #IDRs #IntrinsicallyDisorderedProteins #Chaperones #Preprint

New #preprint online: Reproduction and replication of @benmarwick (2025), with data from @OpenAlex. Accessible as an interactive HTML version: https://aqueff.github.io/replication_Marwick2025_OpenAlex/

and as a more traditional manuscript with a DOI here: https://doi.org/10.31235/osf.io/97a6q_v1

Is #archaeology a hard or soft science?

Too big, too expensive, too ugly... The scientific publishing system is running out of steam. A "niche" article for a good summer: https://www.lemonde.fr/sciences/article/2025/07/07/le-monde-des-revues-scientifiques-au-bord-de-l-asphyxie_6619660_1650684.html

(read it to the end, because there are messages of hope...)

and as a bonus, a companion piece on the use of AI, which doesn't help matters,

https://www.lemonde.fr/sciences/article/2025/07/07/comment-l-ia-bouscule-les-publications-scientifiques_6619655_1650684.html

#science #preprint #research #pci #pubpeer #matilda #openaccess #retraction

@BorisBarbour @enroweb @ElisabethBik

The journal's version: https://doi.org/10.5840/resphilosophica2711

The full issue: https://pdcnet.org/collection-anonymous/browse?fp=resphilosophica&fq=resphilosophica%2FVolume%2F8898%7C102%2F8997%7CIssue%3A+3%2F

movetrack: An R package for modeling flight paths from radio-telemetry networks

Sciety uses our #metadata to retrieve #preprint titles, author info, and version histories, making evaluations easier to connect and display.

Insights from Giorgio Sironi and Mark Williams (eLife): https://tinyurl.com/5yrz3ff2

'On the Comparative Analysis of Methods for Solving the Blasius Boundary Layer Problem of #CompressibleFlow' - a UnisaRxiv #Preprint #Research article open for review on #ScienceOpen:

New #SpotLight episode!

Ryan, @stefanfwirth and Reinier talk with Katelyn Cooper, first author of a groundbreaking preprint: the first in-depth analysis of the LGBTQ+ climate in the biological sciences.

"_Over four months, LLM users consistently underperformed at neural, linguistic, and behavioral levels. These results raise concerns about the long-term educational implications of LLM reliance and underscore the need for deeper inquiry into AI's role in learning._"

Kosmyna, N. et al. (2025) Your Brain on ChatGPT: Accumulation of Cognitive Debt when Using an AI Assistant for Essay Writing Task. https://arxiv.org/abs/2506.08872.

#Preprint #AI #ArtificialIntelligence #LLM #LLMS #ComputerScience #Technology #Tech #Research #Learning #Education @ai

"_In our research, we uncovered a universal jailbreak attack that effectively compromises multiple state-of-the-art models, enabling them to answer almost any question and produce harmful outputs upon request._"

Fire, M. et al. (2025) Dark LLMs: The growing threat of unaligned AI models. https://arxiv.org/abs/2505.10066.

#AI #ArtificialIntelligence #LLMS #DarkLLMS #Technology #Tech #Preprint #Research #ComputerScience @ai