I'll be chatting with the good folks at #3RRR #ByteIntoIt tomorrow evening 7.30pm-ish, all about the #TokenWars and the race for data to train #AI models - with Vanessa Toholka and @floreani and crew.

Looking forward to it!

Here's a lovely piece by my #ANU #Cybernetics colleague, @theEllamo which talks about my #TokenWars talk, and how it's related to concepts like #PeakToken and the value of human-generated #data as the internet becomes polluted by #AI-generated slop.

There's a video link here to the #TokenWars talk, if you haven't seen it already.

Thanks, Ella!

Great post from Will Sinatra on the assemblage of tools he is using to block bots and scrapers.

I recently had the opportunity to present at the Melbourne #ML and #AI Meetup on the topic of the #TokenWars - the resource conflict over data being harvested to train AI models like #LLMs - and the alateral damage this conflict is causing to the open web.

With a huge thanks to Jaime Blackwell you can now see the video here:

https://www.youtube.com/watch?v=C86Y3mXnsNI

Huge thanks to Lizzie Silver for all her behind the scenes work and to @jonoxer for making the connections.

Check out the Meetup at:

Opinion of the day:

The reason OpenAI wants a browser, or a social network, IMHO, is so they can have more training data - more tokens - for their models.

We have reached a point where we are in the Token Crisis - LLMs have been trained on all the publicly available data in the world, and it's costing OpenAI millions to license more data.

It's cheaper to have that data, those tokens, produced for free by people who interact on social media or who use a browser. Data is driving these decisions.

ICYMI: I'll be talking at the Melbourne #ML and #AI Meetup in a couple weeks' time about the #TokenWars - the conflict for data to train LLMs and the fight by IP rights holders to protect their data from scrapers.

Come learn about how #LLMs are trained on huge volumes of tokens with transformers, why those tokens are becoming more economically valuable, and what you can do to protect your token treasure.

You'll never look at ChatGPT or data the same way again.

Huge thanks to @jonoxer for the recommend, and to Lizzie Silver for the behind the scenes wrangling.

https://www.meetup.com/machine-learning-ai-meetup/events/306548300

If you weren't able to make @everythingopen in Adelaide in January but were still keen to catch my talk on the #TokenWars in #ML - the hunt for real, human data amidst a sea of AI-generated slop - then don't despair!

I'm delighted to be giving this talk again at the Melbourne ML and AI meetup in mid-April - with thanks to Lizzie Silver for the behind the scenes organisation and to Jonathan Oxer for making the connection.

Seats are strictly limited - so sign up as soon as you can!

Talk Title: The Token Wars: why not all our content should be open

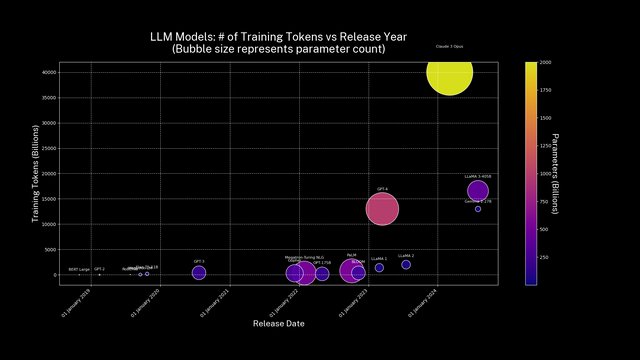

Abstract: In recent years, there has been an explosion in generative AI. Most of us are now familiar with tools like ChatGPT, Midjourney, Sora, and others. At the heart of generative AI is a machine learning architecture called the "transformer", which is fed by huge datasets - text, images and videos. Those datasets are "tokenised" - cut up into chunks which the transformer can ingest. Those actors who can obtain the most tokens can generally train the best models (for various values of "best").

We are now witnessing a battle in which the creators of generative AI models seek to obtain as much data as possible for tokenisation, while their targets try to stop them. The social ramifications of this resource conflict are widespread, resulting in "alateral damage" - a term I am coining to point to the unforeseen, unintended, distal consequences of a seemingly innocuous technology.

These are the Token Wars.

And they're the reason not all our content should be openly available.

In this three-part talk, I first provide a technical grounding on transformers, tokens and how they're used to build text-based generative AI. In the second part, I draw on economics to ask, "why are tokens so valuable?", showing that as the internet becomes filled with AI slop, human-created data is becoming more scarce - and so more expensive. In the third part I explore how you might approach guarding your token treasure, from data poisoning to alternative licensing models and data sovereignty.

You'll leave this talk never looking at data or ChatGPT the same way again.

In just a few days I will travel to Tarntanya/Adelaide - fulfilling a desire to take the Overland across north-western Victoria and into South Australia - to present my talk on the #TokenWars at @everythingopen #EO2025 #EverythingOpen.

The future of many open source, volunteer-run conferences is precarious.

Rising costs of hosting, dwindling sponsorship, and reluctance to fund employees to attend, as well as the increasing burn-out of the dedicated folks who pitch in thousands of hours a year to make them happen - on top of the erosion caused by the pandemic - mean that this may be the last year in many I get to catch up with the community I've come to call "my people" over the last 15 years.

So, let's make it a blast.

Three stellar #keynotes lead the proceedings - maker, technologist and Skill Seeker, @sjpiper145, critical technologist and FOI expert, @daedalus, alongside passionate advocate for the power of libraries, @Trishh.

On top of that, I'm also anticipating great talks from Andy Gelme, @Unixbigot, @saera, @nnye, @dtbell91, @emmadavidson, @kattekrab, @caitelatte@cloudisland.nz, Aleisha Amohia and Sara King, just to name a few - people I have admired and respected for a long time.

See you there, perhaps for the last time in a long while?

You might be familiar with what I'm terming the "Token Wars" - in which #LLM and #GenAI companies seek to ingest text, image, audio and video content to create their #ML models. Tokens are the basic unit of data input into these models - meaning that #scraping of web content is widespread.

In retaliation, many sites - such as Reddit, Inc. and Stack Overflow - are entering into content sharing deals with companies like OpenAI, or making their sites subscription only.

Another solution that has emerged recently is content blocking based on user agent. In web programming, the client requesting a web page identifies itself - usually as a browser or a bot - in the User-Agent request header.

User agents can be blocked by a website's robots.txt file - but only if the user agent respects the robots.txt protocol. Many web scrapers do not. Taking this a step further, network providers like Cloudflare are now offering solutions which block known token scraper bots at a network level.
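
For the curious, here's a minimal sketch of how a well-behaved crawler would consult robots.txt before fetching a page, using Python's standard `urllib.robotparser`. The robots.txt content, the `is_allowed` helper and the example.com URL are all made up for illustration, and `GPTBot` is just one example of a crawler user agent. Note that this check runs on the crawler's side, which is exactly why it only works on bots that choose to respect it.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt for illustration: refuse one AI crawler,
# allow every other user agent.
ROBOTS_TXT = """\
User-agent: GPTBot
Disallow: /

User-agent: *
Allow: /
"""


def is_allowed(user_agent: str, url: str, robots_txt: str = ROBOTS_TXT) -> bool:
    """Return True if the given robots.txt permits user_agent to fetch url."""
    parser = RobotFileParser()
    # parse() takes the file's lines, so no network request is needed here.
    parser.parse(robots_txt.splitlines())
    return parser.can_fetch(user_agent, url)


if __name__ == "__main__":
    # Compare a crawler user agent with a browser-style one.
    for agent in ("GPTBot", "Mozilla/5.0"):
        print(agent, is_allowed(agent, "https://example.com/blog/"))
```

A scraper that skips this check, or lies about its user agent, sails straight past robots.txt - hence the appeal of blocking at the network level instead.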

I've been playing with one of these solutions called #DarkVisitors for a couple weeks after learning about it on The Sizzle and was **amazed** at how much of the traffic to my websites was bots, crawlers and content scrapers.

(No backhanders here, it's just a very insightful tool)